AI? It’s in your inbox, your calendar, your phone… and probably soon in your toilet (there’s even AI in refrigerators now).

But with so many AI tools popping up, how do you know which ones are actually safe to use?

That’s a real challenge for physicians, who are already managing packed schedules and nonstop mental load. Choosing the wrong tool doesn’t just waste time. It can introduce serious risk.

We’re already seeing signs of that. Earlier this year, Google quietly pulled its AI health summaries after an investigation found they were giving out misleading and potentially dangerous medical information. Experts warned that bad guidance like that could cause patients to ignore real problems or delay care.

So no, the biggest risk in 2026 isn’t avoiding AI. It’s using the wrong kind.

Let’s dig into which types of AI tools physicians should approach with caution (or avoid entirely), not just in medicine, but across investing, business, and everyday life.

Disclaimer: While these are general suggestions, it’s important to conduct thorough research and due diligence when selecting AI tools. We do not endorse or promote any specific AI tools mentioned here. This article is for educational and informational purposes only. It is not intended to provide legal, financial, or clinical advice. Always comply with HIPAA and institutional policies. For any decisions that impact patient care or finances, consult a qualified professional.

1. AI That Pretends to Think for You

One of the fastest-growing categories in AI is tools that present themselves as decision-makers.

They rank options. They generate conclusions. They offer “final answers” with confident language.

On the surface, this sounds helpful. Physicians make hundreds of decisions every day. Delegating some of that mental load feels appealing.

The problem is that these tools don’t actually think. They don’t understand your values, constraints, risk tolerance, or long-term goals. They operate on generalized patterns, hidden assumptions, and incomplete context.

This becomes especially risky when used for:

Physicians are particularly vulnerable here because expertise creates trust. When something sounds intelligent and authoritative, it’s easy to assume it’s correct.

But AI doesn’t carry responsibility for outcomes. You do. AI works best as a thinking partner, not a replacement for judgment. If a tool consistently presents outputs as “the answer” rather than inputs for your reasoning, it’s a red flag. In medicine, you wouldn’t accept a diagnosis without understanding the reasoning. AI deserves the same scrutiny.

2. AI Built on Unverifiable or Unclear Data Sources

Another category to be cautious of includes AI tools that can’t clearly explain where their intelligence comes from.

If a platform can’t answer basic questions like:

- What data was this trained on?

- Who owns the outputs?

- How are my inputs stored or reused?

That uncertainty matters. This isn’t just a medical compliance issue. It affects:

- Business content

- Internal documents

- Educational materials

- Presentations

- Intellectual property

Physicians often create valuable assets, from talks and courses to internal frameworks and written content. Using AI tools with unclear data practices can expose that work in ways you didn’t intend. Beyond ownership concerns, opaque data sources increase the risk of inaccurate or biased outputs. If you don’t know what knowledge base a tool is drawing from, you can’t properly evaluate its reliability.

A general rule of thumb: If transparency is vague, incomplete, or avoided, proceed carefully or not at all.

3. “All-in-One” AI That Tries to Do Everything

Many AI platforms promise simplicity through consolidation. One tool to manage writing, scheduling, research, finances, planning, communication, and more.

The idea is appealing, especially for physicians juggling multiple roles. In practice, these tools often do many things poorly instead of a few things well.

Common issues include:

- Shallow functionality

- Bloated interfaces

- Unreliable outputs

- High switching costs once embedded

When everything runs through one system, small failures become systemic problems. And when a tool tries to serve every use case, it rarely excels at the ones that matter most. A more effective approach is intentional modularity. Focused tools with a clear job-to-be-done tend to be more reliable, easier to evaluate, and simpler to replace if they stop serving you.

Complexity disguised as simplicity is still complexity.

4. AI That Can’t Explain Its Work

Trust in AI, like trust in anything, shouldn’t be blind.

Some tools produce outputs without any visibility into how those results were generated. The reasoning is hidden, proprietary, or inaccessible.

This lack of auditability creates risk. If you can’t review the steps, logic, or assumptions behind an output, you can’t confidently rely on it. This becomes especially problematic when AI influences decisions with financial, legal, or reputational consequences.

Physicians are already trained to demand explainability. Lab values come with reference ranges. Imaging comes with reports. Diagnoses come with differentials. AI should meet the same standard.

Tools that allow you to inspect, edit, and understand outputs are far safer than those that ask you to “just trust the system.” If you want a practical example of how physicians can use AI without giving up judgment, see how doctors are quietly using ChatGPT like this.

5. AI That Creates Speed Without Real Leverage

Not all productivity is meaningful.

Some AI tools focus on making you faster at tasks that don’t materially move your life or work forward. They generate more emails, more posts, more content, more activity. The result is motion, not progress.

For physicians, time is not the only constraint. Attention, energy, and decision quality matter more. Speed without leverage simply accelerates burnout.

Before adopting any AI tool, it’s worth asking:

- What problem does this actually solve?

- Does it reduce friction in something that truly matters?

- Or does it just make noise faster?

Leverage comes from systems that compound effort, not tools that optimize “busywork.”

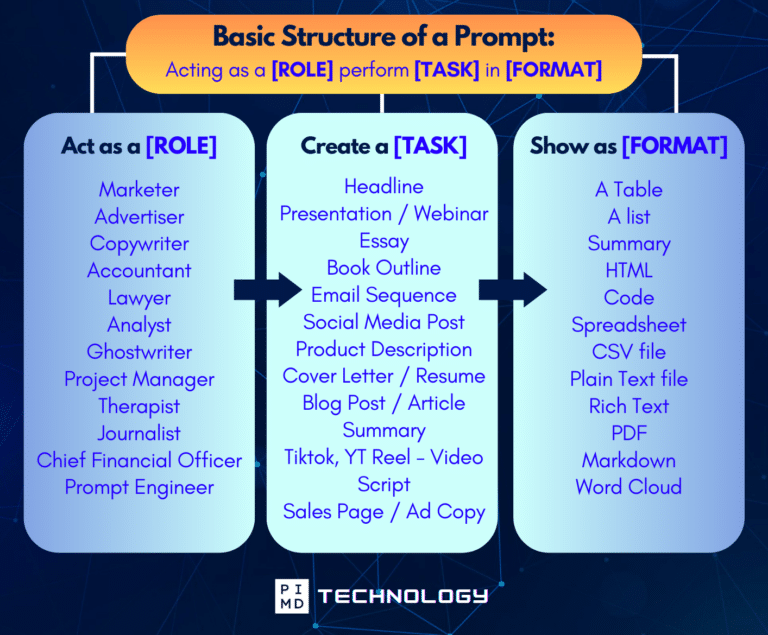

Unlock the Full Power of ChatGPT With This Copy-and-Paste Prompt Formula!

Download the Complete ChatGPT Cheat Sheet! Your go-to guide to writing better, faster prompts in seconds. Whether you’re crafting emails, social posts, or presentations, just follow the formula to get results instantly.

Save time. Get clarity. Create smarter.

How Physicians Should Evaluate AI in 2026

Avoiding bad AI tools is only half the equation. The other half is having a clear framework for evaluation.

Before adopting any AI tool, consider the following questions:

- Clarity: What specific problem does this solve? If the answer is vague, the value probably is too.

- Control: Can you inspect, edit, and override outputs? Or are you locked into whatever it produces?

- Context: Can the tool be meaningfully adapted to your unique situation, or is it generic by design?

- Compliance and Ownership: Are data usage, storage, and output ownership clearly defined?

- Leverage: Does this tool compound your effort over time, or does it just save minutes?

This framework applies whether you’re evaluating AI for clinical workflows, business operations, investing analysis, leadership planning, or personal organization.
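For readers who like to keep notes in a structured form, the five questions above can be sketched as a simple yes/no checklist. This is only an illustration, not a validated scoring method: the `ToolReview` structure, the `open_concerns` helper, and the sample answers are all hypothetical, and a "yes" on every item does not make a tool safe on its own.

```python
from dataclasses import dataclass, fields


@dataclass
class ToolReview:
    """One yes/no answer per question in the framework (hypothetical structure)."""
    clarity: bool                   # solves a specific, clearly stated problem
    control: bool                   # outputs can be inspected, edited, and overridden
    context: bool                   # can be adapted to your own situation
    compliance_and_ownership: bool  # data usage, storage, and ownership are clearly defined
    leverage: bool                  # compounds effort over time, not just saved minutes


def open_concerns(review: ToolReview) -> list[str]:
    """Return the criteria the tool did not satisfy, in framework order."""
    return [f.name for f in fields(review) if not getattr(review, f.name)]


# Made-up answers for an imaginary tool, for illustration only.
review = ToolReview(
    clarity=True,
    control=False,
    context=True,
    compliance_and_ownership=False,
    leverage=True,
)
print(open_concerns(review))  # ['control', 'compliance_and_ownership']
```

Listing the unmet criteria, rather than producing a single score, keeps the judgment with you: each open concern is a prompt to investigate further, not a verdict.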

The Real Advantage? Knowing What Not to Use.

Look, AI isn’t going anywhere. It’s only getting more powerful, more persuasive… and more confusing.

But here’s the truth: success with AI as a physician isn’t about piling on the latest tools. It’s about being intentional. Thoughtful. Selective.

You don’t need more AI; you need better AI, the kind that frees up your time, sharpens your thinking, and fits into your life without adding stress or risk.

Sometimes, the smartest thing you can do is remove the tool that’s creating noise instead of clarity. That one shift can unlock better decisions, less overwhelm, and more control.

This isn’t a call to avoid AI completely. It’s a reminder to use your clinical instincts here too: be skeptical, test carefully, and don’t assume the most popular option is the best one for you.

And you’re not alone in this. That’s what we’re here for: to help you figure out what’s actually useful, what’s worth ignoring, and how to use AI as a tool to amplify the good work you’re already doing. So keep exploring, but do it with your eyes wide open. You’ve got this!

Download The Physician’s Starter Guide to AI – a free, easy-to-digest resource that walks you through smart ways to integrate tools like ChatGPT into your professional and personal life. Whether you’re AI-curious or already experimenting, this guide will save you time, stress, and maybe even a little sanity.

Want more tips to sharpen your AI skills? Subscribe to our newsletter for exclusive insights and practical advice. You’ll also get access to our free AI resource page, packed with AI tools and tutorials to help you have more in life outside of medicine. Let’s make life easier, one prompt at a time. Make it happen!

Disclaimer: The information provided here is based on available public data and may not be entirely accurate or up-to-date. It’s recommended to contact the respective companies/individuals for detailed information on features, pricing, and availability. All screenshots are used under the principles of fair use for editorial, educational, or commentary purposes. All trademarks and copyrights belong to their respective owners.

